Machine Learning in Non-Euclidean Spaces

A key challenge in data science is identifying geometric structure in high-dimensional data. Such structural understanding is of great value for designing efficient algorithms for optimization and machine learning. Classically, the structure of data has been studied under a Euclidean assumption. The simplicity of vector spaces, together with the wide range of well-studied tools and algorithms that assume such structure, makes this a natural approach. Recently, however, it has been recognized that Euclidean spaces do not necessarily allow for the most ‘natural’ representation, at least not in the low-dimensional regime.

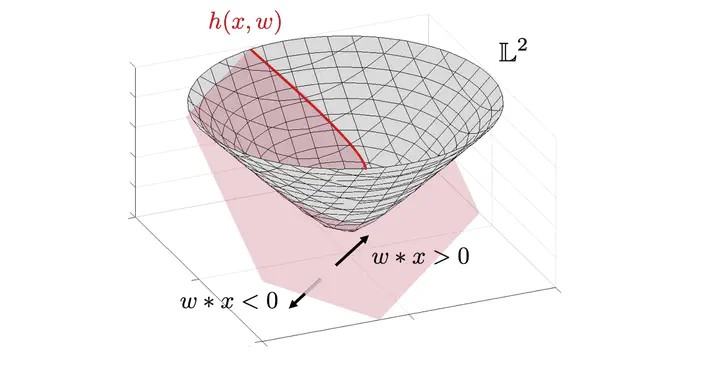

This project studies algorithms for machine learning in non-Euclidean spaces through the lens of differential geometry. I am particularly interested in learning representations that reflect the intrinsic geometry of non-Euclidean data, and in understanding the impact of performing machine learning tasks in the space that is most natural for the data.
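As an illustration of how distances can differ once we leave Euclidean space, the sketch below computes geodesic distances in the Poincaré ball, one standard model of hyperbolic space (curvature -1). The choice of this model and the function name `poincare_distance` are assumptions made for illustration, not the specific method of the publications below. Two pairs of points with the same Euclidean gap of 0.05 end up roughly seven times farther apart in hyperbolic distance when they sit near the boundary of the ball, which hints at why hierarchical, tree-like data can be represented compactly in low-dimensional hyperbolic space.

```python
import math


def poincare_distance(x, y):
    """Geodesic distance between x and y in the Poincare ball model of
    hyperbolic space (curvature -1). Both points must have Euclidean norm < 1.
    Hypothetical helper for illustration only."""
    def sq_norm(v):
        return sum(c * c for c in v)

    diff = sq_norm([a - b for a, b in zip(x, y)])
    denom = (1.0 - sq_norm(x)) * (1.0 - sq_norm(y))
    if denom <= 0:
        raise ValueError("points must lie strictly inside the unit ball")
    # d(x, y) = arcosh(1 + 2 |x - y|^2 / ((1 - |x|^2)(1 - |y|^2)))
    return math.acosh(1.0 + 2.0 * diff / denom)


# Same Euclidean gap (0.05), very different hyperbolic distances.
near_center = poincare_distance([0.0, 0.0], [0.05, 0.0])
near_boundary = poincare_distance([0.9, 0.0], [0.95, 0.0])
print(near_center, near_boundary)
```

Near the origin the model behaves almost like (scaled) Euclidean space, while distances blow up toward the boundary; this exponential growth of volume is what makes hyperbolic space a natural fit for data whose neighborhoods grow exponentially.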

Publications

- M. Weber, M. Zaheer, A. Singh Rawat, A. Menon, S. Kumar: Robust Large-Margin Learning in Hyperbolic Space. NeurIPS 2020

- M. Weber: Neighborhood Growth Determines Geometric Priors for Relational Representation Learning. AISTATS 2020

- M. Weber, M. Nickel: Curvature and Representation Learning: Identifying Embedding Spaces for Relational Data. NeurIPS 2018 Workshop on Relational Representation Learning